Quote:

Originally Posted by Anthony P

that's a dumb comment obviously we can't differentiate stuff that is microscopic, the question is at what size and distance does it happen.

|

I wasn't talking about anything microscopic. The page on the Rayleigh Criterion shows the theoretical resolution limit for 500 nm light (green) and a pupil opening of 5 mm would be 1.22 * 10^-4 radians, which is somewhat smaller than the empirical resolution limit they quote for "most acute vision, optimum circumstances" (i.e. people with better than 20/20 vision in ideal light) of 2 * 10^-4 radians, whereas the chart was based on the assumption of 20/20 vision, which is a resolution of 2.9 * 10^-4 radians. So if you have better than 20/20 vision you may be able to shift the lines in that chart by a factor of 1.45 (i.e. getting the "full benefit" of 2K or 4K at 1.45 times the distance they show there, for a given screen size), and in theory if there were no issues with aberration or with imperfections in the human lens it might be improved by up to a factor of 2.38, but that's the theoretical upper limit.
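For anyone who wants to check the arithmetic, here is a minimal Python sketch using only the figures quoted above (500 nm light, 5 mm pupil, 2 * 10^-4 radians for the most acute vision). The one thing I'm adding is the usual convention that 20/20 vision corresponds to about 1 arcminute, which is where the 2.9 * 10^-4 radians figure comes from. The variable names are just my own labels.

```python
import math

wavelength_m = 500e-9  # green light, as quoted above
pupil_m = 5e-3         # 5 mm pupil opening, as quoted above

# Rayleigh criterion for a circular aperture: theta = 1.22 * lambda / D
rayleigh_rad = 1.22 * wavelength_m / pupil_m

# Assumption: 20/20 vision resolves about 1 arcminute (1/60 of a degree)
acuity_2020_rad = math.radians(1 / 60)

# Empirical "most acute vision, optimum circumstances" figure quoted above
acute_rad = 2e-4

print(f"Rayleigh limit:    {rayleigh_rad:.3g} rad")
print(f"20/20 vision:      {acuity_2020_rad:.3g} rad")
print(f"20/20 vs acute:    {acuity_2020_rad / acute_rad:.2f}x")
print(f"20/20 vs Rayleigh: {acuity_2020_rad / rayleigh_rad:.2f}x")
```

The last two ratios are the 1.45 and 2.38 factors by which the viewing distances in the chart could be stretched.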

Quote:

Originally Posted by Anthony P

again you prove you don't understand anything and just pretending to have an idea of the discussion.

film grain comes from the world of film (as in that cellulose strip). film is cellulose with a coating on it, at the microscopic molecular level that light sensitive coating is uneven and so at the molecular level reacts differently to light in different areas. Now if it was that simple we would not be talking film grain but when projected that film is magnified many times and so what is microscopic becomes big enough to see and called film grain. Now when scanning a film that texture (film grain) will be a fraction of a pixel and depending on the scan algorithm and what is in the image will become pixel size. Unless we are discussing very rare random chance digitalized film grain will never be larger than a pixel. Since the scanner won't read the same anomaly in two spots. We would need a lot higher resolution for a single film grain to be larger than a pixel.

|

I didn't think of it in terms of how large the actual film grain would be, I was just thinking of the fact that you often

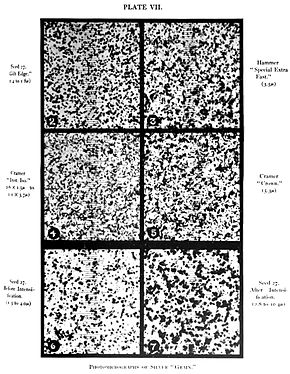

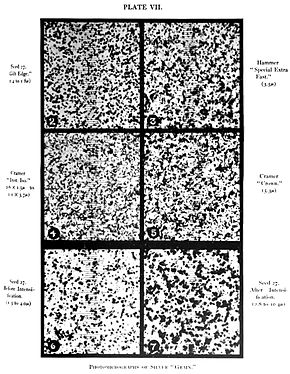

can see grain on blu rays, so obviously the blu ray is representing the grain as at least the size of a pixel and possibly larger. You're right that the real grain on the film is smaller than the scale of a pixel on a 2K screen (at least in the case of 35mm film), but for whatever reason the process of transferring it seems to make the grain visible at a scale larger than the size of individual grains. Maybe it's possible this is again an effect of diffraction smearing out small details with the lens and aperture of whatever scanner they're using on the film, I dunno. Another possibility is that what you are actually seeing in grainy blu rays is clumps of grains rather than individual grains, since there does seem to be some clumping in the top image of wikipedia's

film grain article:

Quote:

Originally Posted by Anthony P

you are missing the point and doing the same mistake as the idiots with the charts.

|

Does "missing the point" mean you actually disagree with my point that you could see a smeared-out white spot even in cases where the pixel size is too small for you to see at the distance you're viewing the screen? Or do you agree that's true, but think your test is nevertheless a good one?

Quote:

Originally Posted by Anthony P

first let me say that the white and black is for three reasons

1) easier to discuss a specific (and if someone would rather have blue with a red dot they can do the same test)

2) some people are more or less colour blind so going with something other than black and white could create an issue (i.e. the guy can't see the pixel at any distance)

3) people are much better at seeing differences in luma than chroma which is why in every system there is more emphasis on brightness than colour

Now let me ask you this

you are looking at the screen and all you see is black. Does the white pixel make a difference in what you see? no, so who cares if it is there.

You are looking at the screen and you see the white pixel on the black. Does the white pixel make a difference in what you see? yes since you see it. It would be wrong to say it does not make a difference to the image.

|

Obviously if you can see a white spot, then that means there is a visible difference between an all-black screen and a black screen with a single white pixel. But we were talking about 2K vs. 4K, weren't we? If you have a single white pixel on a 2K display and a single white pixel on a 4K display (with the 4K pixel a little brighter so both are sending the same total amount of light to your eyes despite the difference in size), and in both cases diffraction is visually smearing them out to the same angular size which is even larger than the angular size of a 2K pixel at the distance you're sitting, do you think in that case you'd be able to tell the difference between these two cases? If not, then the fact that you can see the light from a single pixel at 4K isn't a good test of whether the 4K screen will look any different than the 2K screen at this distance.
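To put rough numbers on when that smearing condition holds, here is a small Python sketch. It compares the angle a single pixel subtends with a given blur angle. The screen width (1.2 m, roughly a 55-inch 16:9 set), the pixel counts (1920 and 3840 across) and the viewing distances are hypothetical values I picked for illustration, and the function names are my own. The blur angle is the 1.22 * 10^-4 radian Rayleigh figure from earlier, so this is the ideal diffraction-limited case and not a claim about any particular person's eyes.

```python
import math

RAYLEIGH_RAD = 1.22e-4  # theoretical limit for 500 nm light and a 5 mm pupil


def pixel_angle_rad(screen_width_m, horizontal_pixels, distance_m):
    """Angle subtended by one pixel's width at the given viewing distance."""
    pitch_m = screen_width_m / horizontal_pixels
    return 2 * math.atan(pitch_m / (2 * distance_m))


def smeared_beyond_pixel(screen_width_m, horizontal_pixels, distance_m,
                         blur_rad=RAYLEIGH_RAD):
    """True if the blur spot is wider than a single pixel at this distance."""
    angle = pixel_angle_rad(screen_width_m, horizontal_pixels, distance_m)
    return angle < blur_rad


# Hypothetical setup: 1.2 m wide screen, viewed from 2 m and from 6 m
for distance_m in (2.0, 6.0):
    for name, pixels in (("2K", 1920), ("4K", 3840)):
        angle = pixel_angle_rad(1.2, pixels, distance_m)
        smeared = smeared_beyond_pixel(1.2, pixels, distance_m)
        print(f"{name} at {distance_m} m: {angle:.3g} rad, smeared={smeared}")
```

Whenever the 2K pixel itself comes out as smeared, the 4K pixel necessarily does too (it is half the width), and that is the situation where the lone-white-pixel test can't separate the two displays.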

Quote:

Originally Posted by Anthony P

for example

if we assume (the green and red lines are to differentiate pixels) in 4k you have image #1 (a white pixel surrounded by black ones) then in 2K it would be something like a, b or c that is shown on the screen depending on how that pixel is created. Obviously if you can see from your seat the white pixel in 4k represented by image #1 neither a, b nor c will look like #1 no matter how much you believe that pixel in #1 will be distorted.

|

And again, if in both cases you were far enough away that diffraction was smearing out the lighter pixel to an angular size larger than that of a 2K pixel, then as long as the total amount of light your eyes were receiving in both cases was the same, you wouldn't see a difference. If your eye was replaced by a camera with a circular aperture the same size as your pupil, then even if the sensor behind the lens was ultra high def it would just record a smeared-out diffraction pattern like this in both cases:

Quote:

Originally Posted by Anthony P

Not at all. Now I did not bring up compression artifacts for this reason (i.e. it was meant as an example of things that are visible but might go unnoticed). But you are wrong. Let's go with a simple example: an image has a gradient (i.e. moving slowly from one colour to another) but because of way over compression you get banding. So, for example, moving from white to black with the 2k compression you end up with a band of white, 20% grey, 40% grey, 60% grey, 80% grey, black.

|

But if that's because of "way over compression", isn't that the same as saying that it's an example of a poor choice of compression algorithm? A good compression algorithm should be able to display an even gradient where each pixel varies slightly from the next, since this is a nice regular pattern that is highly compressible (i.e. you can write a short program to generate a pattern like this without having to separately store the shade of each and every pixel using different bits in memory). You didn't answer my question earlier about whether you thought that the effects of compression would be apparent even when the compression was done in the most skilled way, as opposed to ineptly:

Quote:

|

If you've seen uncompressed video converted to a blu ray and noticed a difference, are you sure the person doing the conversion was skilled at choosing the best compression algorithms, and encoding everything on the disc to fill as much available space as possible? For really high-quality blu rays like those in the "mastered in 4K" series, I wonder if there would really be much visible difference between an uncompressed 2K file produced from the 4K master, and what is actually seen on the blu ray.

|
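On the earlier point that a smooth gradient is a regular, highly compressible pattern, here is a rough Python illustration. It uses zlib from the standard library, which is a general-purpose compressor and not a video codec, so treat it only as a sketch of how little information a gradient carries compared to noise, not as a model of what a blu ray encoder does. The frame size and the helper names are my own choices. The `banded` helper reproduces the six-band white, 20%, 40%, 60%, 80%, black example from the quote.

```python
import random
import zlib

WIDTH, HEIGHT = 256, 64  # hypothetical small greyscale frame, 1 byte per pixel


def gradient_frame(width, height):
    """Horizontal ramp from black (0) to white (255), repeated on every row."""
    row = bytes(x * 255 // (width - 1) for x in range(width))
    return row * height


def noise_frame(width, height, seed=0):
    """Random pixel values: essentially incompressible, for comparison."""
    rng = random.Random(seed)
    return bytes(rng.randrange(256) for _ in range(width * height))


def banded(frame, levels=6):
    """Quantize to a few evenly spaced grey levels, which produces banding."""
    step = 255 / (levels - 1)
    return bytes(round(round(v / step) * step) for v in frame)


gradient = gradient_frame(WIDTH, HEIGHT)
noise = noise_frame(WIDTH, HEIGHT)

for name, frame in (("gradient", gradient), ("noise", noise)):
    print(f"{name}: {len(frame)} bytes raw, "
          f"{len(zlib.compress(frame))} bytes compressed")

print("band levels:", sorted(set(banded(gradient))))
```

The gradient shrinks to a small fraction of its raw size while the noise barely shrinks at all, which is the sense in which a smooth ramp shouldn't need to be crushed into bands to fit on a disc.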
